Installing the Ceph Packages

During integration, the Ceph packages must be installed on the OpenStack controller and compute nodes, which act as Ceph clients.

- Configure the Ceph package repository on the controller and compute nodes.

    vim /etc/yum.repos.d/ceph.repo

Add the following content:

    [Ceph]
    name=Ceph packages for $basearch
    baseurl=http://download.ceph.com/rpm-nautilus/el7/$basearch
    enabled=1
    gpgcheck=1
    type=rpm-md
    gpgkey=https://download.ceph.com/keys/release.asc
    priority=1

    [Ceph-noarch]
    name=Ceph noarch packages
    baseurl=http://download.ceph.com/rpm-nautilus/el7/noarch
    enabled=1
    gpgcheck=1
    type=rpm-md
    gpgkey=https://download.ceph.com/keys/release.asc
    priority=1

    [Ceph-source]
    name=Ceph source packages
    baseurl=http://download.ceph.com/rpm-nautilus/el7/SRPMS
    enabled=1
    gpgcheck=1
    type=rpm-md
    gpgkey=https://download.ceph.com/keys/release.asc
    priority=1

- Refresh the yum cache on the controller and compute nodes.

    yum clean all && yum makecache

- Install the Ceph packages on the controller and compute nodes.

    yum -y install ceph ceph-radosgw

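Configuring the Ceph Environment

- On ceph1, create the required storage pools, adjusting the PG count to suit your cluster (32 per pool in this example).

    ceph osd pool create volumes 32
    ceph osd pool create images 32
    ceph osd pool create backups 32
    ceph osd pool create vms 32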

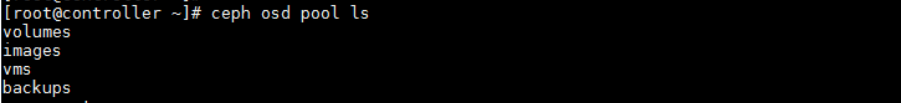
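The value 32 used above is a per-pool placement group count. As a rough sketch of how such a number might be chosen, the helper below applies the commonly cited rule of thumb of about 100 PGs per OSD, divided by the replica count and the number of pools, then rounded up to a power of two. The function name `suggest_pg_num` and the example cluster size (3 OSDs, 3 replicas) are assumptions made for illustration; they are not taken from this environment.

```shell
# Hypothetical helper: estimate a per-pool PG count.
# Usage: suggest_pg_num <osd_count> <replica_count> <pool_count>
suggest_pg_num() {
    osds=$1
    replicas=$2
    pools=$3
    # Rule of thumb: about 100 PGs per OSD, shared across replicas and pools.
    target=$(( osds * 100 / (replicas * pools) ))
    # Round up to the next power of two.
    pg=1
    while [ "$pg" -lt "$target" ]; do
        pg=$(( pg * 2 ))
    done
    echo "$pg"
}

# Assumed example: 3 OSDs, 3 replicas, 4 pools (volumes, images, backups, vms).
suggest_pg_num 3 3 4
```

Treat the result as a starting point only; clusters with uneven pool usage usually need the PG counts weighted per pool.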

- List the pools to confirm that they were created.

    ceph osd pool ls
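Rather than reading the pool list by eye, the check can be scripted. The sketch below wraps `ceph osd pool ls` in a hypothetical helper, `check_pools`, that reports any expected pool that is missing. It assumes it runs on a node where the `ceph` CLI and an admin keyring are available, such as ceph1.

```shell
# Hypothetical helper: report any expected pool missing from `ceph osd pool ls`.
# Returns 0 when every named pool exists, 1 otherwise.
check_pools() {
    existing=$(ceph osd pool ls) || return 1
    status=0
    for pool in "$@"; do
        # -x matches the whole line, -F treats the pool name as a literal string.
        if ! printf '%s\n' "$existing" | grep -qxF -- "$pool"; then
            echo "missing pool: $pool" >&2
            status=1
        fi
    done
    return "$status"
}

# Example (run on ceph1):
# check_pools volumes images backups vms && echo "all pools present"
```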

- On ceph1, synchronize the configuration files to the controller and compute nodes.

    cd /etc/ceph
    ceph-deploy --overwrite-conf admin ceph1 controller compute

- On ceph1, create keyrings for the cinder, glance, and cinder-backup users so that they can access the Ceph pools.

    ceph auth get-or-create client.cinder mon 'allow r' osd 'allow class-read object_prefix rbd_children, allow rwx pool=volumes, allow rwx pool=vms, allow rx pool=images'
    ceph auth get-or-create client.glance mon 'allow r' osd 'allow class-read object_prefix rbd_children, allow rwx pool=images'
    ceph auth get-or-create client.cinder-backup mon 'profile rbd' osd 'profile rbd pool=backups'
    ceph auth get-or-create client.glance | ssh controller tee /etc/ceph/ceph.client.glance.keyring
    ssh controller chown glance:glance /etc/ceph/ceph.client.glance.keyring
    ceph auth get-or-create client.cinder | ssh compute tee /etc/ceph/ceph.client.cinder.keyring
    ssh compute chown cinder:cinder /etc/ceph/ceph.client.cinder.keyring
    ceph auth get-or-create client.cinder-backup | ssh compute tee /etc/ceph/ceph.client.cinder-backup.keyring
    ssh compute chown cinder:cinder /etc/ceph/ceph.client.cinder-backup.keyring
    ceph auth get-key client.cinder | ssh compute tee client.cinder.key

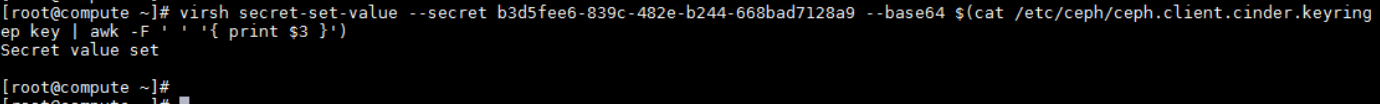

- On the compute node, add the secret to libvirt.

    UUID=$(uuidgen)
    cat > secret.xml <<EOF
    <secret ephemeral='no' private='no'>
      <uuid>${UUID}</uuid>
      <usage type='ceph'>
        <name>client.cinder secret</name>
      </usage>
    </secret>
    EOF
    virsh secret-define --file secret.xml
    virsh secret-set-value --secret ${UUID} --base64 $(cat /etc/ceph/ceph.client.cinder.keyring | grep key | awk -F ' ' '{ print $3 }')

Note: Record the UUID generated here; it is needed later in the Cinder and Nova configuration. In this example the UUID is b3d5fee6-839c-482e-b244-668bad7128a9.
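The `grep key | awk` pipeline in the libvirt step picks up every line that contains the string "key". A slightly stricter alternative is sketched below: a hypothetical helper, `keyring_key`, that prints only the value from the `key = ...` line of a keyring file. It assumes the keyring uses the usual `key = <base64>` layout written by `ceph auth get-or-create`.

```shell
# Hypothetical helper: print the base64 key stored in a Ceph keyring file.
# Matches only the "key = <value>" line, ignoring the section header and caps.
keyring_key() {
    awk '$1 == "key" && $2 == "=" { print $3; exit }' "$1"
}

# Example (on the compute node, with UUID set as in the step above):
# virsh secret-set-value --secret "${UUID}" --base64 "$(keyring_key /etc/ceph/ceph.client.cinder.keyring)"
```

The client.cinder.key file copied to the compute node in the keyring step already holds the bare key, so it could serve as the source instead.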

Configuring Glance to Use Ceph

- On the controller node, edit the Glance configuration file.

    vim /etc/glance/glance-api.conf

Add the following to the configuration:

    [DEFAULT]
    ...
    # enable COW cloning of images
    show_image_direct_url = True
    ...
    [glance_store]
    stores = rbd
    default_store = rbd
    rbd_store_pool = images
    rbd_store_user = glance
    rbd_store_ceph_conf = /etc/ceph/ceph.conf
    rbd_store_chunk_size = 8

- To prevent images from being cached under “/var/lib/glance/image-cache”, add the following to “/etc/glance/glance-api.conf”.

    [paste_deploy]
    flavor = keystone

- Restart the glance-api service on the controller node.

    systemctl restart openstack-glance-api.service
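Once glance-api has restarted, one way to confirm that the RBD store is in use is to upload an image and look for it in the images pool, where Glance names each RBD image after the image ID. The sketch below wraps `rbd ls` in a hypothetical helper, `rbd_image_exists`. It assumes it runs on a node with the `rbd` CLI and sufficient Ceph credentials, such as ceph1, and that `IMAGE_ID` holds an ID copied from `openstack image list`.

```shell
# Hypothetical helper: succeed if an RBD image with the given name exists in a pool.
# Usage: rbd_image_exists <pool> <image-name>
rbd_image_exists() {
    # -x matches the whole line, -F treats the name as a literal string.
    rbd ls "$1" | grep -qxF -- "$2"
}

# Example (on ceph1):
# rbd_image_exists images "$IMAGE_ID" && echo "image is stored in Ceph"
```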

Configuring Cinder to Use Ceph

- On the Cinder node (compute), edit the configuration file “/etc/cinder/cinder.conf”.

    vim /etc/cinder/cinder.conf

Modify or add the following settings:

    [DEFAULT]
    ...
    # Note: the lvm backend configured for Cinder must be commented out
    #enabled_backends = lvm
    enabled_backends = ceph

    [ceph]
    volume_driver = cinder.volume.drivers.rbd.RBDDriver
    volume_backend_name = ceph
    rbd_pool = volumes
    rbd_ceph_conf = /etc/ceph/ceph.conf
    rbd_flatten_volume_from_snapshot = false
    rbd_max_clone_depth = 5
    rbd_store_chunk_size = 4
    rados_connect_timeout = -1
    glance_api_version = 2
    rbd_user = cinder
    rbd_secret_uuid = b3d5fee6-839c-482e-b244-668bad7128a9

- On the Cinder node (compute), restart the cinder-volume service.

    systemctl restart openstack-cinder-volume.service

Configuring Cinder Backup to Use Ceph

- On the Cinder node (compute), edit the configuration file “/etc/cinder/cinder.conf”.

    vim /etc/cinder/cinder.conf

Modify or add the following settings:

    [DEFAULT]
    ...
    #backup_driver = cinder.backup.drivers.swift.SwiftBackupDriver
    ...
    backup_driver = cinder.backup.drivers.ceph.CephBackupDriver
    backup_ceph_conf = /etc/ceph/ceph.conf
    backup_ceph_user = cinder-backup
    backup_ceph_chunk_size = 4194304
    backup_ceph_pool = backups
    backup_ceph_stripe_unit = 0
    backup_ceph_stripe_count = 0
    restore_discard_excess_bytes = true

When setting backup_driver, comment out any other backup_driver entries.

- Restart the cinder-backup service.

    systemctl restart openstack-cinder-backup.service

Configuring Nova to Use Ceph

- On the Nova compute node (compute), edit the configuration file “/etc/nova/nova.conf”.

    vim /etc/nova/nova.conf

Modify or add the following settings:

    [DEFAULT]
    ...
    live_migration_flag="VIR_MIGRATE_UNDEFINE_SOURCE,VIR_MIGRATE_PEER2PEER,VIR_MIGRATE_LIVE,VIR_MIGRATE_PERSIST_DEST,VIR_MIGRATE_TUNNELLED"

    [libvirt]
    ...
    virt_type = kvm
    images_type = rbd
    images_rbd_pool = vms
    images_rbd_ceph_conf = /etc/ceph/ceph.conf
    disk_cachemodes="network=writeback"
    rbd_user = cinder
    rbd_secret_uuid = b3d5fee6-839c-482e-b244-668bad7128a9

- On the Nova compute node (compute), restart the nova-compute service.

    systemctl restart openstack-nova-compute.service
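Both cinder.conf and nova.conf must carry the same rbd_secret_uuid that was registered with libvirt, and a mismatch is an easy mistake to make when copying values by hand. The sketch below shows one possible way to cross-check them. The helper name `ini_get` is hypothetical, and the parser is deliberately minimal: it assumes plain `key = value` lines under `[section]` headers, as in the examples in this guide.

```shell
# Hypothetical helper: print the value of <key> inside [<section>] of an INI-style file.
# Usage: ini_get <file> <section> <key>
ini_get() {
    awk -v section="[$2]" -v key="$3" '
        /^[ \t]*\[/ {
            # Section header: strip whitespace and remember whether it is the wanted one.
            gsub(/[ \t]/, "", $0)
            in_section = ($0 == section)
            next
        }
        in_section {
            eq = index($0, "=")
            if (eq == 0) next
            name = substr($0, 1, eq - 1)
            gsub(/[ \t]/, "", name)
            if (name == key) {
                value = substr($0, eq + 1)
                gsub(/^[ \t]+|[ \t]+$/, "", value)
                print value
                exit
            }
        }
    ' "$1"
}

# Example (on the compute node):
# cinder_uuid=$(ini_get /etc/cinder/cinder.conf ceph rbd_secret_uuid)
# nova_uuid=$(ini_get /etc/nova/nova.conf libvirt rbd_secret_uuid)
# [ "$cinder_uuid" = "$nova_uuid" ] && virsh secret-list | grep -q "$cinder_uuid" && echo "UUIDs match"
```

Commented-out lines such as `#enabled_backends = lvm` are skipped because their key name begins with `#` and so never equals the requested key.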